Exploring Australian indigenous bible translation

Everyone deserves to have access to the bible in their native language.

Given the large number of Australian indigenous languages, I wanted to see how far data could take me towards answering the question: which Australian indigenous language group is most in need of bible translation?

It turns out that data can go a long way. As to the question itself:

Spoiler: It’s the Kukatja people in Western Australia.

I answered this question by writing some software to aggregate data on bible translation efforts, language groups, language relationships, speaker counts and locations, and English language competency. I used data from the Joshua Project, the World Atlas of Language Structures (WALS), the 2011 Australian Census, SIL’s ISO language code mappings, Find A Bible, AIATSIS AUSTLANG and Tindale’s Catalogue of Australian Aboriginal Tribes. After a bit of recent inspiration and rework, I’ve published the output from the tool. Keep in mind that this was written as a proof-of-concept: the user interface has plenty of rough edges, and the data has only had limited review.
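To give a feel for what aggregating these sources involves, here is a minimal sketch in Python. It is not the code from the tool. It assumes each source has already been reduced to a dictionary keyed by ISO 639-3 language code, and the function name `merge_by_iso`, the field names and all of the values are made up for illustration. The codes `qaa` and `qab` come from the ISO 639-3 range reserved for local use, so they don't refer to real languages.

```python
from collections import defaultdict


def merge_by_iso(*sources):
    """Combine per-language records from several sources into one record per code.

    Each source is a dict mapping an ISO 639-3 code to a dict of fields.
    Later sources overwrite earlier ones if they share a field name.
    """
    merged = defaultdict(dict)
    for source in sources:
        for iso_code, fields in source.items():
            merged[iso_code].update(fields)
    return dict(merged)


# Hypothetical, hand-written stand-ins for three of the data sources.
names = {"qaa": {"name": "Language A"}, "qab": {"name": "Language B"}}
census = {
    "qaa": {"speakers": 120, "non_english_readers": 40},
    "qab": {"speakers": 300, "non_english_readers": 10},
}
scripture = {"qaa": {"scripture": "none"}, "qab": {"scripture": "portions"}}

combined = merge_by_iso(names, census, scripture)
print(combined["qaa"])
```

In practice the hard part is getting every source onto a shared key in the first place, since not every dataset identifies languages by ISO code.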

You can see it at https://language-explorer.wordspeak.org.

Why the Kukatja?

Most importantly, they don’t have any of the bible in their native language. Given the first book of the bible to be translated is usually one of the gospels, a language group with even a single book has access to the key parts of the message of Jesus. Given everyone should have some chance to read about Jesus, it’s important to prioritise giving that first opportunity. Secondly, while the Kukatja aren’t the largest language group without bible access (that would be the Jaru), their low (self-assessed) ability in Australia’s national language, English, means that they can’t use an English-language bible either, so they’re quite stuck.

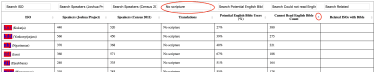

Here’s output from the software showing only language groups without a bible (filtered by No Scripture) and sorted by the count of people who don’t read English.
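The filter and sort behind that view can be sketched in a few lines of Python. This is an illustration under assumptions, not the tool's own code: the function name `rank_by_need`, the field names and the numbers are all hypothetical, and I'm assuming the sort runs from the largest count down.

```python
def rank_by_need(groups):
    """Keep groups with no scripture, largest count of non-English readers first."""
    no_scripture = [g for g in groups if g["scripture"] == "none"]
    return sorted(
        no_scripture,
        key=lambda g: g["non_english_readers"],
        reverse=True,
    )


# Made-up records; none of these values come from the real data.
groups = [
    {"name": "Language A", "scripture": "none", "non_english_readers": 40},
    {"name": "Language B", "scripture": "portions", "non_english_readers": 90},
    {"name": "Language C", "scripture": "none", "non_english_readers": 75},
]

for group in rank_by_need(groups):
    print(group["name"], group["non_english_readers"])
```

Note that Language B drops out despite having the highest count, because it already has some scripture. That is the same reason the largest group without a bible isn't automatically at the top of the list.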

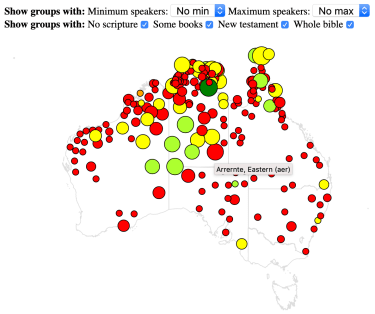

The software also has a map of language groups, with circle size corresponding to speaker count and colour showing scripture access (red circles are groups without any scripture, yellow circles are groups with only a single book of scripture).

The data shown in the software is a few years old, so it’s quite possible that the Kukatja and the Jaru people have a bible translation underway, but I think there’s promise in combining a data-driven approach with God’s leading as we work towards giving everyone access to the bible in their native language. If you’re interested in current Australian indigenous bible translation efforts, take a look at Aboriginal Bibles.

The source code for the software is available on GitHub.